By the E2P team (E2P@povertyactionlab.org)

Well-designed randomized evaluations test theories and provide general insights into how programs designed to address poverty work. Combined with descriptive data and a deep understanding of local context and institutions, these insights provide useful guides for policy design.

J-PAL’s new Evidence to Policy case studies explore how J-PAL-affiliated researchers and staff, along with government and NGO partners, have drawn on evidence from randomized evaluations to inform policy changes around the world. The new section includes 17 case studies, with more on the way. Together they illustrate the many pathways through which randomized evaluations shape policy and practice, including:

- Shifting global thinking: Knowledge generated by randomized evaluations has fundamentally shaped our understanding of social policies.

- Institutionalizing evidence use: Organizations, including governments and large NGOs, have institutionalized processes for rigorously evaluating innovations and incorporating evidence into their decision-making.

- Applying research insights: Lessons from randomized evaluations have informed the design of programs.

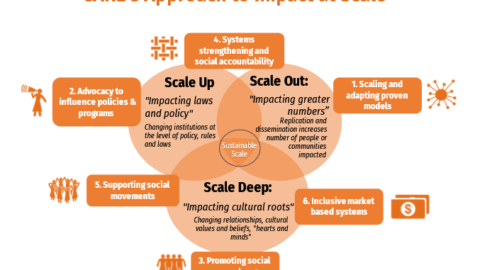

- Adapting and scaling a program: Programs originally evaluated in one context have been adapted and scaled in others.

- Scaling up an evaluated pilot: Partners have replicated and expanded a successful evaluated pilot to similar contexts.

- Scaling back an evaluated program: Partners have scaled down, redesigned, or decided to not move forward with programs that were evaluated and found to be ineffective.

To date, programs that were scaled up after being evaluated by J-PAL-affiliated researchers have reached over 400 million people. Millions more have been affected through the many other ways evidence from randomized evaluations has informed policy, though in those cases beneficiaries are harder to count. Examples include:

- A shift in thinking among donors and development agencies toward eliminating user fees, after more than a dozen randomized evaluations showed that charging fees for preventive health products, such as malaria bed nets, dramatically reduced take-up;

- The decision to scale back a biometric monitoring program for health providers in Karnataka state, India, after an evaluation showed that the government was unwilling to enforce the penalties for provider absenteeism;

- The decision to replicate and scale the “Graduation approach,” a multifaceted livelihood program first designed by the Bangladeshi NGO BRAC, in multiple locations worldwide, based on evidence that it helps the poorest women and their households transition to more sustainable livelihoods.

The website also includes an Evidence to Policy resources page, featuring reflections from J-PAL affiliates and staff on how to leverage evidence to inform policy, as well as practical frameworks and guidance for adapting evidence from one context to another, assessing whether a program is ready for scale, understanding the value of evaluation in scaling policies, and more.